Optimising AI Training for Complex Real-World Tasks

Explore effective strategies for optimising AI training for complex and variable real-world tasks, enabling robust performance and adaptability in diverse environments.

Even large language models (LLMs) with hundreds of billions of parameters can struggle when real-world conditions shift in ways their training did not anticipate. This shows how hard it is to teach AI to handle the real world’s changes. As AI use grows, we must find better ways to train AI for complex tasks.

This article talks about new ways to improve AI training. We’ll look at machine learning basics and how to handle errors well. You’ll learn about the “proc3s” method, which helps AI systems plan and act safely.

Key Takeaways

Unlocking the power of large language models (LLMs) to tackle complex, open-ended tasks

Exploring the “proc3s” method, which uses large language models to plan and simulate actions so that robots can handle open-ended requests safely

Developing robust error-handling mechanisms to ensure AI agents can navigate unpredictable real-world scenarios

Implementing adaptive learning strategies to optimise AI training for variable and dynamic environments

Integrating real-time feedback systems to improve AI performance and support ethical AI development

Understanding the Fundamentals of AI Training Optimisation

Artificial intelligence (AI) is growing fast. Making the training process better is key to creating strong AI systems. This involves understanding machine learning architecture, designing training pipelines, and measuring performance.

Core Principles of Machine Learning Architecture

Good AI training starts with a strong machine-learning foundation. It’s about picking the right algorithms and model structures. This helps AI systems learn and adapt well.

Key Components of Training Pipeline Design

The training pipeline is vital for AI systems. It turns raw data into useful insights. It’s important to manage data collection, preprocessing, and model fine-tuning well. This is especially true in healthcare, where AI-driven health models face many challenges.
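
To make the pipeline stages concrete, here is a minimal Python sketch under our own assumptions: the toy data, the function names (`collect`, `clean`, `fit_scaler`, `fit_threshold`, `run_pipeline`) and the one-feature threshold model are all hypothetical. What it illustrates is that preprocessing statistics are learned once from the training data and then reused unchanged at prediction time.

```python
from statistics import mean, pstdev


def collect():
    """Hypothetical raw data: (feature, label) rows, one with a missing value."""
    return [(1.0, 0), (2.0, 0), (3.0, 0), (None, 1), (7.0, 1), (8.0, 1), (9.0, 1)]


def clean(rows):
    """Preprocessing step 1: drop rows with a missing feature."""
    return [(x, y) for x, y in rows if x is not None]


def fit_scaler(rows):
    """Preprocessing step 2: learn standardisation statistics from training rows."""
    xs = [x for x, _ in rows]
    return mean(xs), pstdev(xs)


def scale(rows, scaler):
    mu, sigma = scaler
    return [((x - mu) / sigma, y) for x, y in rows]


def fit_threshold(rows):
    """Tiny model: a decision threshold halfway between the two class means."""
    m0 = mean(x for x, y in rows if y == 0)
    m1 = mean(x for x, y in rows if y == 1)
    return (m0 + m1) / 2


def run_pipeline():
    rows = clean(collect())
    scaler = fit_scaler(rows)
    threshold = fit_threshold(scale(rows, scaler))

    def predict(raw_x):
        # Inference reuses the training scaler so inputs match what the model saw.
        z = (raw_x - scaler[0]) / scaler[1]
        return int(z > threshold)

    return predict


predict = run_pipeline()
print(predict(2.5), predict(7.5))  # 0 1
```

Keeping the scaler inside the returned `predict` function is one simple way to stop training and inference from drifting apart; real pipelines usually save these statistics alongside the model.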

Essential Performance Metrics and Benchmarks

Checking how well an AI system works is crucial. Metrics like accuracy and F1 score show where a model needs to improve, and in healthcare they help show whether a model meets the standards expected of clinical tools. Setting benchmarks and tracking progress helps make AI better over time.
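
As a small illustration of why F1 is reported alongside accuracy, the sketch below computes both from scratch for binary labels. The function name and the example labels are made up for illustration.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(classification_metrics(y_true, y_pred))
print(classification_metrics(y_true, [0] * 10))  # always predicts "negative"
```

On the example labels the model scores 0.8 accuracy and 0.75 F1, while a lazy model that always predicts the negative class still reaches 0.6 accuracy but has an F1 of 0. That gap is why accuracy alone can hide poor performance on the cases that matter.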

“The use of AI in healthcare is a double-edged sword — it holds immense promise but also comes with significant risks if not developed and deployed responsibly. Rigorous testing, transparency, and ethical oversight are critical to ensuring AI-driven health models truly benefit patients and society.”

- Dr Sarah Cen, Equality AI researcher

Understanding AI training optimisation helps make AI systems better. They become more accurate and follow ethical rules. This approach is key to making AI a game-changer in healthcare and more.

Advanced Techniques for Teaching AI Systems Environmental Adaptation

In AI development, a big challenge is making AI models adapt well to different real-world settings. Researchers have found a new way to improve AI’s adaptability and fix bias in training.

This method identifies the specific training examples that contribute most to a model’s failures and removes them, without hurting overall performance. Curating the training data this way cuts down AI model bias and leads to more precise and dependable predictions.

The method starts with a detailed look at the training data. It finds examples that cause model errors or biases. Then, these examples are removed from the training set. This helps the model learn to work well in many different situations.
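
The steps above can be sketched in Python, with two loud assumptions. First, the researchers’ published method is not reproduced here; this toy swaps in brute-force leave-one-out retraining, which is only practical for tiny datasets. Second, the data, the nearest-centroid model and every function name are hypothetical. The loop greedily removes the training example whose removal most improves accuracy on the worst-served group, and only accepts a removal if overall validation accuracy does not fall below its starting value.

```python
from statistics import mean

# Each example is (feature, label, group). Toy data, made up for illustration.
TRAIN = [
    (0.0, 0, "a"), (1.0, 0, "a"), (0.5, 0, "a"), (10.0, 0, "b"),  # 10.0 is an outlier
    (4.0, 1, "a"), (5.0, 1, "a"), (3.0, 1, "b"),
]
VAL = [(0.5, 0, "a"), (4.5, 1, "a"), (3.0, 1, "b"), (1.0, 0, "b")]


def fit_centroids(train):
    """Nearest-centroid classifier: the mean feature value of each class."""
    labels = sorted({y for _, y, _ in train})
    return {label: mean(x for x, y, _ in train if y == label) for label in labels}


def predict(centroids, x):
    """Return the class whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


def accuracy(centroids, data):
    return sum(predict(centroids, x) == y for x, y, _ in data) / len(data)


def worst_group_accuracy(centroids, data):
    """Accuracy on whichever group the model serves worst."""
    groups = sorted({g for _, _, g in data})
    return min(accuracy(centroids, [ex for ex in data if ex[2] == g]) for g in groups)


def prune_harmful_examples(train, val, max_removals=3):
    """Greedily remove training examples whose removal raises worst-group
    validation accuracy, without letting overall validation accuracy fall
    below its starting value."""
    current = list(train)
    removed = []
    start = fit_centroids(current)
    best_worst, min_overall = worst_group_accuracy(start, val), accuracy(start, val)
    for _ in range(max_removals):
        best_idx = None
        for i in range(len(current)):
            subset = current[:i] + current[i + 1:]
            if len({y for _, y, _ in subset}) < 2:
                continue  # the classifier needs both classes to remain
            model = fit_centroids(subset)
            score = worst_group_accuracy(model, val)
            if score > best_worst and accuracy(model, val) >= min_overall:
                best_idx, best_worst = i, score
        if best_idx is None:
            break  # no single removal helps any more
        removed.append(current.pop(best_idx))
    return current, removed, best_worst


kept, removed, score = prune_harmful_examples(TRAIN, VAL)
print(removed, score)  # [(10.0, 0, 'b')] 1.0
```

Here the outlier at 10.0 drags the class-0 centroid towards class 1, so a group "b" example is misclassified; removing that single example lifts worst-group accuracy from 0.5 to 1.0.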

This new technique uses advanced data analysis and model optimisation to reduce bias in AI models and keep accuracy high.

Removing the training examples that drive biased predictions helps the model perform more consistently across the varied conditions it meets outside the lab.

The result is an AI system that can handle complex, real-world scenarios better. This makes AI applications more reliable and trustworthy.

This innovative approach opens up new possibilities for AI to adapt to changing environments. It helps AI models succeed in the dynamic landscapes of today’s world.

Optimising AI Training for Complex and Variable Real-world Tasks

Artificial intelligence (AI) is now key in many fields. It’s crucial to make sure AI trains well for real-world tasks. We’ll look at how to handle errors, adapt to new situations, and manage resources during training.

Implementing Robust Error Handling Mechanisms

AI needs strong error handling for complex tasks. It must deal with issues like political bias in AI reward models and data problems. Good error detection and fixing help AI stay reliable, even in tough situations.
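
One common pattern for this kind of error handling is to validate inputs, quarantine bad records with a reason instead of crashing, and back off when an optimisation step diverges. The sketch below applies that pattern to a one-parameter regression; the record format, the function names and the learning-rate halving rule are assumptions chosen for illustration.

```python
import math


def validate(record):
    """Return a reason string if the record is unusable, else None."""
    x, y = record
    if not all(isinstance(v, (int, float)) for v in (x, y)):
        return "non-numeric value"
    if not all(math.isfinite(v) for v in (x, y)):
        return "non-finite value"
    return None


def split_records(records):
    """Separate usable records from quarantined ones (kept with a reason)."""
    clean, quarantined = [], []
    for record in records:
        reason = validate(record)
        if reason is None:
            clean.append(record)
        else:
            quarantined.append((record, reason))
    return clean, quarantined


def fit_slope(records, lr=0.5, epochs=100):
    """Fit y = w * x by gradient descent on mean squared error.
    If a step would make the loss non-finite or larger, skip it and halve lr."""
    clean, quarantined = split_records(records)
    if not clean:
        raise ValueError("no usable training records")

    def loss(weight):
        return sum((weight * x - y) ** 2 for x, y in clean) / len(clean)

    w = 0.0
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in clean) / len(clean)
        candidate = w - lr * grad
        new_loss = loss(candidate)
        if not math.isfinite(new_loss) or new_loss > loss(w):
            lr /= 2  # recover from a bad step instead of crashing
            continue
        w = candidate
    return w, quarantined


records = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (float("nan"), 1.0), ("bad", 2.0), (None, 3.0)]
w, quarantined = fit_slope(records)
print(round(w, 6), quarantined)
```

The starting learning rate of 0.5 is deliberately too large for this data, so the first steps are rejected and the rate is halved until training settles; the three malformed records are reported rather than silently dropped.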

Developing Adaptive Learning Strategies

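AI must learn and adapt to changing tasks. Adaptive learning strategies help models update their skills with new data. They include transfer learning, which reuses what a model learned on one task to speed up learning on a related one, and meta-learning, which trains models to adapt quickly to new tasks. Both make AI better suited to complex tasks.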
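
A toy illustration of transfer learning, under stated assumptions: two hypothetical linear tasks, a source task with plenty of data (y = 2x + 1) and a related target task with only three points (y = 2.2x + 1). Starting the target run from the source weights (fine-tuning) is compared with starting from zero, using the same small budget of gradient steps. Real transfer learning reuses large pretrained networks, but the idea of warm-starting from related knowledge is the same.

```python
def train(data, w=0.0, b=0.0, lr=0.05, steps=50):
    """Gradient descent on mean squared error for the model y = w * x + b."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def mse(data, w, b):
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# Hypothetical tasks: plenty of source data, only three points for the related target.
source = [(k / 10, 2.0 * (k / 10) + 1.0) for k in range(-20, 21)]
target = [(x, 2.2 * x + 1.0) for x in (-1.0, 0.0, 1.0)]

w_src, b_src = train(source, steps=500)

# Same small budget of 5 steps on the target task for both runs.
w_ft, b_ft = train(target, w=w_src, b=b_src, steps=5)  # transfer: start from source weights
w_sc, b_sc = train(target, steps=5)                    # baseline: start from zero

print(f"fine-tuned loss:   {mse(target, w_ft, b_ft):.4f}")
print(f"from-scratch loss: {mse(target, w_sc, b_sc):.4f}")
```

With this setup the fine-tuned model ends with a much lower target loss than the one trained from scratch, because it only has to learn the small difference between the two tasks.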

Managing Resource Allocation During Training

Training AI well means managing resources like computer power and memory. Smart resource use makes training faster and cheaper. Related work on getting LLMs to explain their predictions in plain language addresses a different need: making trained models easier to trust and use.
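
Two back-of-the-envelope tools help with memory planning. The first uses a widely cited rule of thumb for mixed-precision training with the Adam optimiser: about 16 bytes per parameter for weights, gradients and optimiser state, before counting activations. The second uses gradient accumulation, where several small micro-batches are processed before each weight update so that a large effective batch fits in limited memory. The function names and example numbers are hypothetical.

```python
# Rough rule of thumb for mixed-precision training with the Adam optimiser:
# 2 bytes (fp16 weights) + 2 bytes (fp16 gradients)
# + 12 bytes (fp32 master weights, momentum and variance) = 16 bytes per parameter.
# Activations, temporary buffers and framework overhead are NOT included.
BYTES_PER_PARAM = 16


def model_state_gb(num_params):
    """Memory for weights, gradients and optimiser state, in gigabytes."""
    return num_params * BYTES_PER_PARAM / 1e9


def plan_accumulation(target_batch, max_micro_batch):
    """Pick the largest micro-batch that fits in memory and divides the target
    batch evenly; gradients are accumulated over the resulting number of steps."""
    for micro in range(min(target_batch, max_micro_batch), 0, -1):
        if target_batch % micro == 0:
            return micro, target_batch // micro
    raise ValueError("target_batch must be a positive integer")


print(model_state_gb(7e9))         # 112.0 (GB for a 7-billion-parameter model)
print(plan_accumulation(512, 48))  # (32, 16): 16 micro-batches of 32 per update
```

Choosing a micro-batch that divides the target evenly keeps every update on exactly the intended batch size, at the cost of sometimes using a slightly smaller micro-batch than memory would allow.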

Improving AI training tackles big challenges. It makes AI more reliable and effective in many areas. This is crucial for AI’s role in our world.

Integrating Real-Time Feedback Systems in AI Training

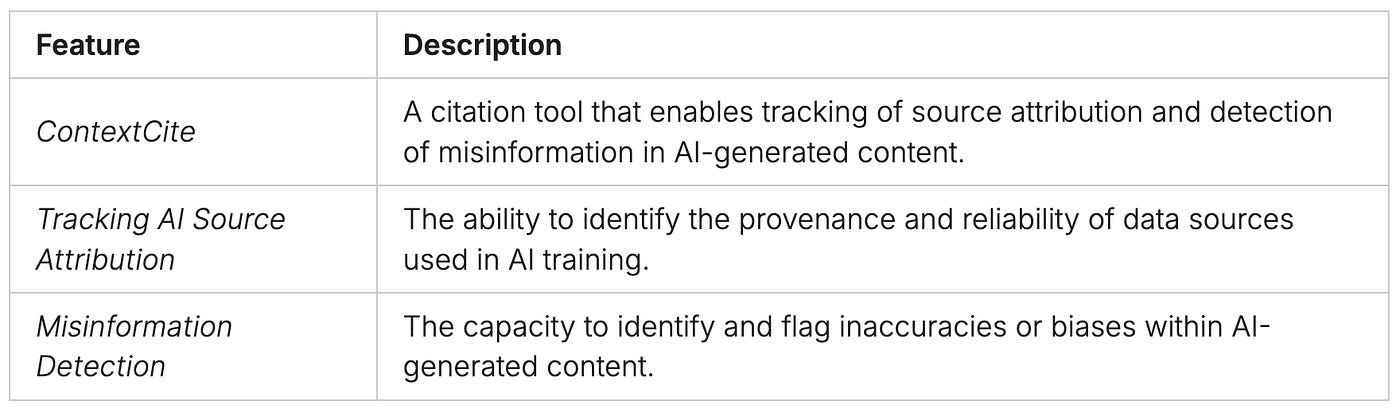

AI systems are getting smarter and more important in our digital world, and they need strong feedback mechanisms to improve. ContextCite is a new tool that traces which parts of the source material an AI-generated statement relies on, which helps flag misinformation in AI content.

Using ContextCite in AI training makes AI more open and accountable. This makes AI models better and more trustworthy for users.

Empowering AI with Contextual Awareness

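ContextCite traces AI-generated statements back to the parts of the source material that support them. It works by removing pieces of the context and measuring how the model’s response changes; a statement that no source supports is a warning sign of wrong information.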
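
The ablation idea can be sketched in a few lines. ContextCite is described as fitting a sparse linear model over many random ablations of the context, using a real language model’s probabilities; this sketch swaps in the simpler leave-one-out variant and a hypothetical word-overlap score (`toy_support_score`) in place of a language model, so it shows the principle rather than the tool itself.

```python
def words(text):
    """Lower-case words with surrounding punctuation stripped."""
    return [w.strip(".,!?;:").lower() for w in text.split()]


def toy_support_score(context, response):
    """Hypothetical stand-in for a language model's likelihood of `response`
    given `context`: the fraction of response words found in the context."""
    context_words = {w for sentence in context for w in words(sentence)}
    response_words = words(response)
    return sum(w in context_words for w in response_words) / len(response_words)


def leave_one_out_attribution(context, response, score=toy_support_score):
    """Score each context sentence by how much support for the response
    drops when that sentence is removed (ablated)."""
    full = score(context, response)
    return [
        full - score(context[:i] + context[i + 1:], response)
        for i in range(len(context))
    ]


context = [
    "The bridge opened in 1932.",
    "It spans the harbour.",
    "Tickets cost nothing.",
]
response = "The bridge opened in 1932."
for sentence, weight in zip(context, leave_one_out_attribution(context, response)):
    print(f"{weight:.2f}  {sentence}")
```

The first sentence receives all of the credit (0.80) and the other two receive none. An unsupported claim such as "The bridge is painted red." gets a low support score (0.4) even with the full context, which is the kind of signal that points to possible misinformation.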

Feeding these source checks back into training and evaluation can show developers where a model misreads or ignores its sources, helping it handle tough tasks more accurately.

Improving AI Adaptability and Performance

Real-time feedback systems make AI more reliable and adaptable. They learn from user feedback and improve over time.

This ongoing learning makes AI stronger and more versatile, so it can handle complex tasks accurately and catch misinformation.
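
A minimal sketch of one piece of such a feedback loop, assuming feedback arrives as a simple correct/incorrect signal per answer: the hypothetical `FeedbackMonitor` class keeps a rolling window of recent feedback and flags when accuracy drops below a threshold, which could then trigger review or retraining. The window size, threshold and minimum event count are illustrative values, not recommendations.

```python
from collections import deque


class FeedbackMonitor:
    """Keep a rolling window of user feedback and flag when the model's
    recent accuracy falls below a threshold."""

    def __init__(self, window=100, threshold=0.8, min_events=20):
        self.events = deque(maxlen=window)  # oldest feedback drops out automatically
        self.threshold = threshold
        self.min_events = min_events  # avoid reacting to a handful of events

    def record(self, was_correct):
        self.events.append(bool(was_correct))

    def recent_accuracy(self):
        return sum(self.events) / len(self.events) if self.events else None

    def needs_update(self):
        return (
            len(self.events) >= self.min_events
            and self.recent_accuracy() < self.threshold
        )


monitor = FeedbackMonitor(window=10, threshold=0.8, min_events=5)
for _ in range(10):
    monitor.record(True)  # users confirm ten answers
print(monitor.needs_update())  # False
for _ in range(3):
    monitor.record(False)  # three answers flagged as wrong
print(monitor.recent_accuracy(), monitor.needs_update())  # 0.7 True
```

Using a fixed-size window means old feedback stops counting, so the monitor reacts to recent changes in the model’s behaviour rather than to its lifetime average.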

Real-time feedback systems are key to AI’s future. They help make AI better, more reliable, and ready for complex challenges.

Addressing Bias and Ethical Considerations in AI Development

AI is growing fast, and we must tackle bias and ethics in its development. It’s key to make AI fair, accountable, and transparent, especially as it reaches into areas as varied as vehicle design and 3D modelling.

Methods for Identifying and Mitigating Training Bias

Bias can sneak into AI’s training data and algorithms. To fight this, developers need strong methods to find and fix bias. They should use diverse data, check for biases, and watch model outputs for unfairness.
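
Watching model outputs for unfairness often starts with comparing simple rates across groups. The sketch below reports each group’s selection rate (how often the model predicts the positive class) and true-positive rate, plus the largest gap between groups for each; these correspond to the demographic parity and equal opportunity criteria. The function names and data are hypothetical, and a gap is a signal to investigate rather than proof of unfairness on its own.

```python
def rate(values):
    return sum(values) / len(values) if values else 0.0


def group_fairness_report(y_true, y_pred, groups):
    """Per-group selection rate and true-positive rate, plus the largest gap
    between groups for each (demographic parity and equal opportunity gaps)."""
    report = {}
    for g in sorted(set(groups)):
        members = [i for i, gi in enumerate(groups) if gi == g]
        report[g] = {
            "selection_rate": rate([y_pred[i] for i in members]),
            "true_positive_rate": rate([y_pred[i] for i in members if y_true[i] == 1]),
        }
    gaps = {
        metric: max(r[metric] for r in report.values())
        - min(r[metric] for r in report.values())
        for metric in ("selection_rate", "true_positive_rate")
    }
    return report, gaps


y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
report, gaps = group_fairness_report(y_true, y_pred, groups)
print(report)
print(gaps)
```

In the example, group "a" is selected 75% of the time and group "b" 25%, and qualified members of group "b" are recognised only half as often, so both gaps are 0.5.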

Implementing Ethical Guidelines in AI Training

AI teams must set clear ethical rules for their work. They should focus on fairness, openness, and responsibility, and think about how AI affects society. This way, AI can reflect community values in every application, from vehicle design to open datasets.

Ensuring Transparency in Learning Processes

Being open is key to ethical AI. It helps us understand how AI makes decisions. Developers should explain their data, algorithms, and logic clearly. This builds trust and accountability with users.

As AI spreads across industries, tackling bias and ethics is vital. By using strong methods, setting ethical rules, and being open, AI can help everyone. This way, AI can make our future better for all.

Conclusion

Optimising AI training for real-world tasks is complex. It involves understanding machine learning and designing training pipelines. It also means using advanced techniques for adapting to environments.

Technologies like photonic processors could make AI faster and more advanced. Researchers like Marzyeh Ghassemi are working to make AI fair and reliable, especially in healthcare. This ensures AI benefits everyone.

The future of AI training is bright, with ongoing improvements in feedback systems and learning strategies. By embracing these advancements and focusing on fairness and transparency, we can make AI solve our world’s biggest problems.

FAQ

How are researchers teaching robots their limits to complete open-ended tasks safely?

Researchers have created the “proc3s” method, which uses large language models to plan a robot’s actions and simulate them before they are carried out. This way, robots can handle unclear requests safely.
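
The propose-then-check idea behind that answer can be illustrated with a short sketch. It is not the proc3s implementation: a seeded random sampler (`propose_plan`) stands in for the language model, and the one-dimensional "arm", its reach limit and the block width are hypothetical values chosen for illustration. Only a plan that passes every simulated check is returned; otherwise the planner reports failure instead of acting.

```python
import random

REACH = 1.0        # hypothetical: the arm can only reach positions with |x| <= 1.0
BLOCK_WIDTH = 0.2  # hypothetical block width


def propose_plan(rng, n_blocks):
    """Stand-in for the LLM: a 'plan' is a list of x-positions for blocks,
    sampled from a simple parameterised template (start position and gap)."""
    start = rng.uniform(-1.5, 1.5)
    gap = rng.uniform(0.0, 0.5)
    return [start + i * (BLOCK_WIDTH + gap) for i in range(n_blocks)]


def violations(plan):
    """Simulated constraint check: every block reachable, no two overlapping."""
    problems = [f"unreachable position {x:.2f}" for x in plan if abs(x) > REACH]
    xs = sorted(plan)
    for a, b in zip(xs, xs[1:]):
        if b - a < BLOCK_WIDTH:
            problems.append(f"blocks overlap at {a:.2f} and {b:.2f}")
    return problems


def plan_safely(n_blocks, attempts=200, seed=0):
    rng = random.Random(seed)
    for _ in range(attempts):
        plan = propose_plan(rng, n_blocks)
        if not violations(plan):
            return plan  # only a plan that passes simulation would be executed
    return None  # report failure rather than act on an unsafe plan


print(plan_safely(3))   # a feasible plan for three blocks
print(plan_safely(20))  # None: twenty blocks cannot fit within reach
```

Returning `None` for an impossible request is the "knowing its limits" part: twenty blocks need at least 3.8 units of space, but the arm can only cover 2.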

What are the regulatory gaps in AI-driven health models and algorithms?

A study by researchers from MIT, Equality AI, and Boston University points out gaps in how AI-driven health models are regulated. The authors argue that rules alone are not enough for AI in health care.

How can bias be reduced in AI models while improving accuracy?

A new method finds and removes examples that cause model failures. This way, AI models can be less biased without losing accuracy.

What political bias has been uncovered in certain AI language models?

MIT researchers found political bias in some reward models, the models used to align language models with human preferences. The bias appeared even when the reward models were trained on factual data.

How are LLMs being developed to explain their predictions in plain language?

LLMs are being made to explain their predictions in simple terms. This is to build trust and make them easier to use.

How can the source attribution of AI-generated content be tracked?

A tool called “ContextCite” helps track sources in AI content. It also detects misinformation in AI-generated content.

How can open-source AI data be used to design eco-friendly cars?

MIT has released a dataset of 8,000 car designs. These designs include aerodynamic details for sustainable vehicle design.

How is generative AI improving 3D model creation?

A new technique in generative AI makes creating 3D shapes easier. Artists and engineers can now make more realistic shapes.

How can a photonic processor boost AI computation speed?

This chip uses light for deep neural network operations. It makes AI faster and more energy-efficient.

How is AI being designed to address equity and robustness challenges in health care?

Marzyeh Ghassemi is working on AI for health care. She aims to solve equity and robustness challenges.

How can AI-generated satellite images help communities prepare for storms?

A new AI tool creates realistic flood scenarios. It helps communities get ready for storms.

How are researchers improving the reliability of AI systems in variable real-world applications?

MIT researchers have developed a training method designed to make AI systems perform reliably across real-world tasks that vary, not just under the conditions they were trained on.

How are AI and human musicians collaborating for live improvisation breakthroughs?

Keyboardist Jordan Rudess works with MIT’s “jam_bot.” Together, they create live improvisations between humans and AI.

How are robot dogs being trained with generative AI simulations?

LucidSim uses AI images to teach robots parkour. Robots learn without needing real-world data.

How is AI being used to map science-art connections and suggest groundbreaking materials?

An AI approach developed by Markus Buehler maps connections between science and art and uses them to suggest new materials.